Handling latency in data acquisition systems

Introduction

In recent projects, I found myself working with data acquisition systems. For instance:

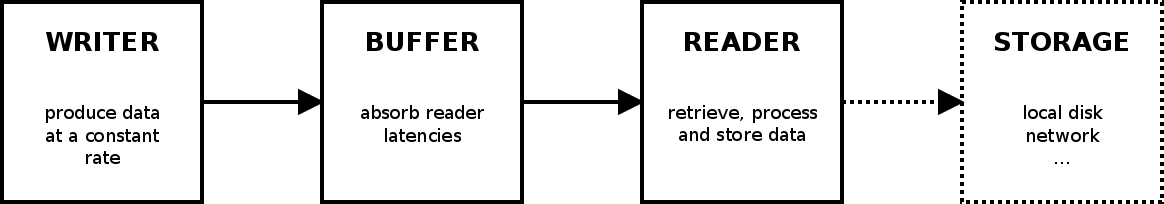

While they differ in purpose and performance, they share a similar setup: a point-to-point topology where a writer produces data that are consumed by a reader.

The writer usually relies on dedicated hardware to perform the data transfer. Its data production rate is constant and dictated by the nature of the physics involved.

On the other side, the reader is typically a software program that must process and store the received data for its job to be considered done. It must do so while sharing computing and IO resources with other programs. As a result, the reading side is prone to perturbations that can significantly affect its performance. These perturbations must be understood and quantified when designing the data acquisition system.

Latency

In an ideal system, a reader would consume data at a constant rate. In practice, the reader is interrupted by various events, which reduce the time it spends reading data. For our purposes, we refer to this lost time as latency. Put differently, latency is the time that is excluded from the data rate. Other definitions of latency exist, but this one fits our needs, so we will stick to it.

Note that the writer may also be subject to latencies, but due to its deterministic nature they are usually already accounted for in its data rate. So we only consider reader latencies.

Latency is hard to quantify and is made up of several terms. The idea is to identify the usual suspects, well known to computer system designers. In general, they cannot be quantified exactly, but worst-case estimates are simple to make. Adding up their individual contributions, plus a safety margin or factor, gives the latency. I use two complementary approaches for that:

- figure out the system activity over one particular second,

- take actual measurements on a loaded system. On Linux, you can start here.
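As a rough illustration of the second approach, the sketch below busy-loops on a monotonic clock and counts any gap between two consecutive readings that is longer than a threshold as time the process was not running. Dividing the lost time by the elapsed time gives an estimate of L in seconds per second. The function name, the 50 µs threshold and the use of Python's `time.perf_counter` are assumptions for illustration; the probe also sees interpreter pauses (garbage collection, for instance), and dedicated tools measure this more precisely.

```python
import time


def measure_latency(duration_s: float = 1.0, gap_threshold_s: float = 50e-6) -> float:
    """Hypothetical probe: estimate the normalized reader latency L (s/s).

    duration_s must be > 0. Any gap between two consecutive clock readings
    longer than gap_threshold_s is assumed to be time the process was
    preempted (scheduler, interrupts, page faults, other processes, ...).
    """
    lost = 0.0
    start = prev = time.perf_counter()
    end = start + duration_s
    while prev < end:
        now = time.perf_counter()
        gap = now - prev
        if gap > gap_threshold_s:   # far too long for one loop iteration,
            lost += gap             # so count it as lost reading time
        prev = now
    return lost / (prev - start)    # fraction of wall time lost, in [0, 1]
```

Run it while the system is under its expected load, repeat it several times, and keep the worst value as L for the buffer computations.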

In a typical shared-resource computer system, latencies result from IO, operating system and other process activities that preempt the reader process. The following list identifies common causes and provides tricks to reduce them:

- the operating system scheduler runs periodically, even when there is nothing to schedule. If your system supports tickless operation, enable it. Linux does so through the CONFIG_NO_HZ option,

- complete any pending work that could otherwise occur during the acquisition. For instance, flush filesystem buffers beforehand. In Linux, refer to sync and fsync,

- remove or disable any device not needed for the task (network interfaces ...),

- preallocate and lock the memory used by the reader code. This prevents the system page allocator from performing costly lookups during the acquisition. It is also a good idea to touch this memory once beforehand, so that no page fault occurs when processing the acquired data. In Linux, refer to mlock and mmap,

- if the system allows it, give your process a higher priority than other processes, and possibly higher than IO handling itself. In Linux, refer to sched_setscheduler,

- use multicore architectures to distribute the system load.
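A minimal Linux sketch of some of these tricks, written in Python for brevity, could look as follows. `prepare_reader` is a hypothetical helper: it calls `os.sync`, preallocates and pre-touches an anonymous `mmap` buffer, locks it with the libc `mlock` function through `ctypes`, and requests the `SCHED_FIFO` policy with `os.sched_setscheduler`. The priority value of 50 is an arbitrary assumption, and the steps that need privileges report failure instead of raising. A real reader would more likely do this in C.

```python
from __future__ import annotations

import ctypes
import mmap
import os


def prepare_reader(buffer_size: int, rt_priority: int = 50) -> tuple[mmap.mmap, dict]:
    """Hypothetical helper applying some latency-reduction tricks.

    mlock needs CAP_IPC_LOCK or a large enough RLIMIT_MEMLOCK, and SCHED_FIFO
    needs CAP_SYS_NICE; those steps are reported as False when they fail.
    """
    status = {}

    # Flush dirty filesystem buffers now, so writeback does not preempt us later.
    os.sync()
    status["sync"] = True

    # Preallocate the acquisition buffer as an anonymous mapping.
    buf = mmap.mmap(-1, buffer_size)

    # Touch one byte per page so page faults happen now, not while acquiring.
    for offset in range(0, buffer_size, mmap.PAGESIZE):
        buf[offset] = 0

    # Lock the buffer in RAM with libc mlock(addr, len).
    libc = ctypes.CDLL(None, use_errno=True)
    libc.mlock.argtypes = (ctypes.c_void_p, ctypes.c_size_t)
    libc.mlock.restype = ctypes.c_int
    addr = ctypes.addressof(ctypes.c_char.from_buffer(buf))
    status["mlock"] = libc.mlock(addr, buffer_size) == 0

    # Request a real-time FIFO scheduling policy for this process (Linux only).
    try:
        os.sched_setscheduler(0, os.SCHED_FIFO, os.sched_param(rt_priority))
        status["sched_fifo"] = True
    except (AttributeError, OSError):
        status["sched_fifo"] = False

    return buf, status
```

Keep the returned mapping alive for the whole acquisition. Tickless operation and device removal are system configuration matters and are not covered by this sketch.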

Buffering

Data produced while the reader is preempted must be buffered, or they are lost. There are different ways to implement buffering. While more complex implementations exist, a common one is a single, statically sized, FIFO-queue-like structure backed by the process memory.

Managing any data structure has a cost, so it also induces latency. It is therefore important to optimize this structure and the associated code.
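To make the FIFO idea concrete, here is a minimal single-threaded sketch of a statically sized ring buffer over a preallocated `bytearray`. The class name and the policy of dropping incoming bytes when the buffer is full (counting them in `lost`) are assumptions; a production reader would typically implement this in a lower-level language, with the writer and reader running concurrently and the indices shared safely between them.

```python
class RingBuffer:
    """Hypothetical fixed-size byte FIFO; storage is allocated once and never grows."""

    def __init__(self, capacity: int) -> None:
        self._data = bytearray(capacity)  # preallocated storage
        self._capacity = capacity
        self._head = 0    # next write position
        self._tail = 0    # next read position
        self._size = 0    # bytes currently stored
        self.lost = 0     # bytes dropped because the buffer was full

    def write(self, chunk: bytes) -> int:
        """Writer side: store what fits, count the rest as lost, return bytes stored."""
        n = min(len(chunk), self._capacity - self._size)
        first = min(n, self._capacity - self._head)   # part before the wrap point
        self._data[self._head:self._head + first] = chunk[:first]
        self._data[:n - first] = chunk[first:n]       # wrapped part, possibly empty
        self._head = (self._head + n) % self._capacity
        self._size += n
        self.lost += len(chunk) - n
        return n

    def read(self, max_bytes: int) -> bytes:
        """Reader side: remove and return up to max_bytes, oldest first."""
        n = min(max_bytes, self._size)
        first = min(n, self._capacity - self._tail)
        out = bytes(self._data[self._tail:self._tail + first]) + bytes(self._data[:n - first])
        self._tail = (self._tail + n) % self._capacity
        self._size -= n
        return out
```

Dropping the newest data is only one overflow policy; overwriting the oldest data is another, depending on which samples matter more to the application.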

Choosing the right buffer size is also critical. Too small and you will lose data, too large and you lose money. The right size depends on the data rates, the latency and the acquisition time. A usual strategy is to allocate a buffer 'as large as possible' (i.e. choosing the latest DDR component). It will surely work, but a few simple computations give an approximation of the size actually needed. First, let us define the following quantities:

- R and W, the reader and writer data rates, in bytes per second,

- T, the acquisition time, in seconds,

- L, the reader latency normalized over T, so its unit is seconds per second,

- B, the buffer size, in bytes.

During the acquisition, the writer produces the quantity:

W * T

And the reader consumes the quantity:

R * (T * (1 - L))

Then, there are 2 cases when choosing the buffer size. The first one is when the reader is faster than the writer:

R * (T * (1 - L)) > W * T

This is a continuous acquisition system, i.e. one where data can be consumed indefinitely without loss. In this case, the buffer size should be chosen such that it can absorb the worst-case latency. Within one second, the reader is preempted for L seconds, during which the writer produces W * L bytes, so:

B >= W * L

The second case is when the reader is slower than the writer, either because of its lower data rate or because of latency:

R * (T * (1 - L)) <= W * T

When the difference between the quantities produced and consumed exceeds the buffer size, data start being lost. Thus, we want:

B >= W * T - R * (T * (1 - L))

That is:

B >= T * (W - (R * (1 - L)))

We may also want to know how long an acquisition can last with a given buffer size. Rearranging the inequality above (valid in this second case, where W - R * (1 - L) is positive) gives:

T <= B / (W - (R * (1 - L)))
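The two inequalities can be wrapped in small helper functions. The names are hypothetical; both take sizes and rates in any consistent unit (for instance MB and MB/s) and L in seconds per second. When W - R * (1 - L) is not positive, the reader keeps up, so this sketch returns 0 for the size and infinity for the duration, leaving the latency-absorbing buffer of the continuous case to be sized separately.

```python
import math


def min_buffer_size(w: float, r: float, latency: float, t: float) -> float:
    """B >= T * (W - R * (1 - L)); 0 when the reader keeps up with the writer."""
    return max(0.0, t * (w - r * (1.0 - latency)))


def max_acquisition_time(b: float, w: float, r: float, latency: float) -> float:
    """T <= B / (W - R * (1 - L)); infinite when the reader keeps up."""
    deficit = w - r * (1.0 - latency)   # net bytes accumulated per second
    if deficit <= 0:
        return math.inf                 # this bound no longer limits T
    return b / deficit
```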

For instance, if we have a 150MB/s writer, a 100MB/s reader, a latency of 0.3s/s, and we want an acquisition time of 5s:

B = 5 * (150 - (100 * (1 - 0.3)))

B = 400MB

Also, with a buffer of 128MB, the maximum acquisition time is:

T = 128 / (150 - (100 * (1 - 0.3)))

T = 1.6s
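The closed-form bounds assume the latency can be lumped into one block per second. A small discrete-time simulation, stepping in milliseconds, can sanity-check them under that assumption. The function name, the integer kB-per-millisecond units (so 150 kB/ms is 150MB/s) and the placement of the latency window at the start of each second are illustrative assumptions. With the numbers of the example above (150 and 100 kB/ms, 300 ms of latency per second, 5 s), the peak fill reaches 400,000 kB, i.e. 400MB. With a fast reader (100 and 200 kB/ms), the peak stays at the amount written during a single latency window, 30,000 kB.

```python
def simulate_peak_fill(w: int, r: int, latency_ms: int, seconds: int) -> int:
    """Hypothetical model: return the peak buffer fill in kB (buffer unbounded).

    w, r: writer and reader rates in kB per millisecond.
    latency_ms: the reader is preempted for the first latency_ms of every second.
    """
    fill = 0
    peak = 0
    for t in range(seconds * 1000):
        fill += w                    # the writer never stops
        if t % 1000 >= latency_ms:   # reader runs outside the latency window
            fill -= min(r, fill)     # it can only read what is buffered
        peak = max(peak, fill)
    return peak
```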
