In this article we’re going to take a look at cycle time, queues, and inventory. Cycle time is a manufacturing term — for anything, not just semiconductors — meaning how long it takes for an individual product to make its way through a manufacturing process, from start to finish. We’re going to try to understand how long it takes to manufacture semiconductors. In particular, we’re going to try to answer these questions:

- How long does it take for a wafer to make its way through the fab? And how long after that to get finished ICs?

- What kinds of steps make up most of the cycle time in a wafer fab?

- What factors influence fab cycle time?

- Is there a tradeoff between cycle time and throughput?

- What strategy do semiconductor fabs use to maximize their throughput, given a limited capital expenditure budget?

In Part Four we went through a recap of how the chip shortage has been doing in 2022, and looked at some dismal predictions of how long it may take for the shortage to end. Certain segments of the semiconductor market, like DRAM and flash memory, microprocessors, and other ICs for consumer electronics, have recovered from supply constraints, and are in a glut due to decreased demand and increases in capacity. Other segments, like industrial and automotive components, still have long lead times and strong demand that seems near-impossible to outpace; their manufacturing processes are the so-called “mature nodes” (40nm - 250nm, more or less) for which foundries have the lion’s share of production but essentially no interest in expanding capacity. It’s a catch-22: the production cost is cheap because the equipment is depreciated, but putting new equipment into use can’t compete with that cost until after it has been depreciated. Adding more mature-node capacity would require enormous capital expense and serious assurance of long-term utilization — and until someone volunteers to cover the cost and risk, the foundries aren’t rushing in to do so themselves. Meanwhile, the automotive market is seeing increasing semiconductor content in each vehicle, with electric vehicles (EV) and advanced driver assistance systems (ADAS) as long-term trends that are fueling demand.

But today we’re going to get back to technical topics, and talk about cycle time.

Cycle time in manufacturing is a necessary but undesirable delay. Semiconductor fab cycle time, in particular is long, measured in weeks or even months. Longer than you might think, longer than it could be, but about as long as it should be. While in the fab, wafers spend the majority of their time waiting in front of machinery. Yes, that’s right, just sitting there, waiting. I’ll explain why this is the case, and why you already know the reasons from everyday life experiences with queues.

I’ll also explain why delays have contributed to the chip shortage, in Part Six, but for now just take it on faith: Delays = Bad. Low Cycle Time = Good. Semiconductor fabs work extremely hard to manage cycle time; this continues to be a hot topic in the field of operations research.

Note that I said manage cycle time, not minimize cycle time. There’s a very easy way to reduce cycle time to near-minimum, but you wouldn’t like it, because it involves lowering the production rate.

For the most part I will be covering the “front-end” manufacturing process — that is, the steps in a semiconductor fab clean room, from blank wafers to finished wafers. There’s also the “back-end” process of taking a wafer, sawing it into individual die, assembling each die into a package, and testing the finished components. I don’t know too much about the back-end steps, but some of the same principles apply.

Disclaimers

Usual disclaimers:

I am not an expert in operations research. I am also not directly involved in the semiconductor manufacturing process. So take my “wisdom” with a grain of salt. I have made reasonable attempts to understand some of the nuances of the semiconductor industry that are relevant to the chip shortage, but I expect that understanding is imperfect. At any rate, I would appreciate any feedback to correct errors in this series of articles.

Though I work for Microchip Technology, Inc. as an application engineer, the views and opinions expressed in this article are my own and are not representative of my employer. Furthermore, although from time to time I do have some exposure to internal financial and operations details of a semiconductor manufacturer, that exposure is minimal and outside my normal job responsibilities, and I have taken great care in this article not to reveal what little proprietary business information I do know. Any specific financial or strategic information I mention about Microchip in these articles is specifically cited from public financial statements or press releases.

Inventory

I want to start with a short tangent about inventory.

One thing that struck me during the worst months of the chip shortage was the inventory reported by different companies. Namely, it wasn’t zero. Or even close to zero.

Many semiconductor companies report days of inventory, either in their quarterly financials or in the earnings calls, and the kind of numbers they were reporting were typically in the 90 - 120 day range. Here are days of inventory for eight of the nine major automotive/industrial semiconductor companies (Analog Devices, Diodes Inc., Infineon, Microchip Technology, NXP, ON Semi, STMicroelectronics, Texas Instruments) over the last three years:

Sure, you can see the dip in 2021 and 2022, but none of them drops below 75 days. That’s two-and-a-half months worth of products! Then why did we have a chip shortage?!

There’s a catch here. We have to define inventory a little more carefully. I talked about automotive inventory in Part Four, with the idea that it was cars and trucks sitting in various places ready to be sold. In semiconductors, perhaps the idea of inventory evokes a picture of warehouses full of chips sitting on shelves. But if you listen to earnings calls, things can get a little blurry, depending on what the speaker is talking about. Here’s Texas Instruments’ Rafael Lizardi during TI’s January 2023 earnings call,[1] in response to a question about how long it will take to balance out inventory:

Yeah, so let me take that. And first, big picture, let me point you to our scorecard, the one that we used for capital management when we talked about the objectives in— when it comes to inventory, is to maintain high levels of customer service, keeping stable lead times while minimizing inventory obsolescence. You know, our strategy and our portfolios is such that it’s long-lived, with a very diverse customer base. So the risk of obsolescence is very low. So that’s a part of the equation.

And the other part is the upside that we get by having that inventory, both short-term and long-term, to support customers. So that’s why we’re comfortable holding higher levels of inventory. I’ve been talking about from current levels, we could add a billion to \$2 billion of additional inventory. And the timing, that all depends on revenue trends. So if they’re higher, then it’ll take longer. If those serving trends are a little weaker, then it’ll be a little faster to get there.

At first glance, Lizardi seems to be painting a picture of chips on shelves to support customers. (Imagine! Two billion dollars worth of inventory, sitting there ready to be sold! Where is this undisclosed warehouse, and how big is it?) But then he says this:

On the mix is a number of angles on that, chip stock versus finished goods, we have a mix of both of those. In some cases, it makes sense to have more of one than the other, but they’re both very low risk. So that’s how we think about it.

What? Chip stock? Huh?

The catch is that a big portion of inventory isn’t chips sitting on a shelf. It’s anything tangible that is expected to be a source of revenue but hasn’t been sold yet. So all those wafers in the fab that haven’t been completed: they still count as inventory.

Renesas was the one major automotive/industrial semiconductor manufacturer that didn’t report days of inventory consistently in a numeric form that I could add to my graph. But I did find a recent presentation from Renesas that points out the different components of inventory, from an accounting perspective:

- Raw materials

- Work-in-process (WIP)

- Finished goods

In general terms, products that are in a partially-complete stage in a manufacturing line are known as work in process or WIP; they start as raw materials, and when they are completed, they are known as finished goods.

The graph on the right shows very clearly that the majority component of inventory at Renesas has been WIP.

In reality it’s a little more complicated. At a high level, semiconductor manufacturing looks like the diagram below. Processes are rectangles and inventory locations are circles.

- Raw materials including blank wafers go into a fab to produce finished wafers

- Finished wafers go through wafer probe (usually in the fab) for each die to be tested with special probes that make contact to pads on the die. Each wafer has an ID, typically scribed on the wafer margin, and the results of testing each die go into a database to form a “wafer map” that keeps track of which die have passed probe and which have failed. In the old days, the method of keeping track was decidedly more low-tech: the die that failed test were marked with a dot of ink.

- Wafers that have been probed go into a die bank. This is a storage facility which may be in the fab itself, or in the assembly facility, or in an external third-party location.

- To produce finished goods (packaged ICs ready to be sold), the wafer is singulated into individual die, which are assembled into a packaged IC and tested. (Failed die that are identified in the wafer map are discarded prior to assembly.)

- Wafer fab and probe comprise the front-end part of the manufacturing process; assembly and test comprise the back-end.

- In some cases, wafers are stored in a wafer bank part-way through the wafer fab process.

What are wafer bank and die bank for? Pericom Semiconductor’s 2013 Annual Report put it this way:

We closely integrate our manufacturing strategy with our focus on customer needs. Central to this strategy is our ability to support high-volume shipment requirements at low cost. We design products so that we may manufacture many different ICs from a single partially processed wafer. Accordingly we keep inventory in the form of wafer bank, from which wafers can be completed to produce a variety of specific ICs in as little as five weeks. This approach has enabled us to reduce our overall work-in-process inventory while providing increased availability to produce a variety of finished products. In addition we keep some inventory in the form of die bank, which can become finished product in three weeks or less.

Die bank is more common than wafer bank, but the idea is the same: one design produces multiple finished goods, and the partially-completed wafer or fully-completed wafer can be stored at the point in the manufacturing process right before that single design diverges into multiple variations. For wafer bank, this would probably be done before the last metal layer or layers, allowing some flexibility in the final manufactured wafer while minimizing time to completion. For die bank, the variation is accomplished during the assembly and test steps: different packaging options can be used, or silicon fuses can be programmed. This simplifies the manufacturing process by deferring the need to specify exactly which product will be manufactured until right before it is sold, so that manufacturers can avoid keeping excess inventory in finished goods. Imagine that one microcontroller has 20 different variant part numbers. (This is fairly common: the one-time costs of product design and manufacturing are so high that multiple variants are usually envisioned to support different package options, temperature ranges, or even memory sizes with a single IC design and mask set.) Without die bank, if the demand for each variant is uncertain, the manufacturer might need to produce excess of some variants to ensure there is stock available. If an order for a million pieces of variant 14 is canceled, and someone needs variant 5 instead, there is no way to convert packaged parts from one variant to another. On the other hand, if a cancellation occurs before assembly and test, then the inventory in die bank can be repurposed for a different customer order.

Die bank is also much closer to the end product, and holding inventory in die bank is much quicker to deliver finished goods than starting from the beginning of the manufacturing process.

There’s also two very tangible cost advantages of holding inventory in die bank.

One cost savings is that the packaging costs don’t need to be spent until a wafer is processed in back-end. The other is the carrying cost of maintaining inventory: imagine a full 200mm wafer of LM358 op-amps. The bare die is only about one square millimeter. The full wafer is about 31000 square millimeters; even with only 67% yield including area lost in the scribe lines, that wafer would yield about 21000 good die. If these were to be assembled as SO-8 parts, the volume of each part is much higher; those 21000 parts would end up in seven reels of 3000 parts each… and the same wafers would need to be split up among different packaging types and temperature ratings, all organized and tracked. Or the wafer could simply be stored in die bank, in a more compact form, all ready to go when needed.

The CFO of Analog Devices put it this way in an August 2022 earnings call:

Die bank is an extremely cost-efficient place for us to hold inventory, particularly when you have 75,000 SKUs. You can hold it— sort of think of it as ten cents on the dollar. So it is very economically efficient and allows us to improve customer satisfaction later on.

Here’s a chart from NXP’s Corporate Business Continuity Update showing some of these different stages of inventory, including die bank:

Inventory is strategically allocated at different points in the manufacturing cycle to help buffer against transient mismatches in supply and demand. For long-lived industrial/automotive products, die bank is where much of the inventory should reside, like water in a primary reservoir. (Short-lived consumer ICs or DRAM that may only have a few years’ potential market is a different story, and holding on to excess content is risky.) Semiconductor manufacturers generally do break down inventory into raw materials / WIP / finished goods, but don’t disclose how much WIP is in die bank or in the front-end or back-end section of the manufacturing line.

Although having extra inventory is helpful when there are surges in demand, there are some major downsides of having too much inventory:

- If it’s not used before it goes obsolete, those parts get scrapped and realize no revenue for the company

- Cost is incurred to organize, maintain, and secure inventory

- The cost of the excess inventory itself could have been deployed elsewhere. A company maintaining \$600M worth of inventory instead of \$500M worth of inventory is using \$100M of funds that could be going into research and development, or sales and marketing, or capital expenses.

Determining the right level of inventory and the right level of production in the presence of all these issues is a huge challenge. It gets worse when we look at the larger supply chain rather than just within a given semiconductor manufacturer, and we’ll see this next time in Part Six.

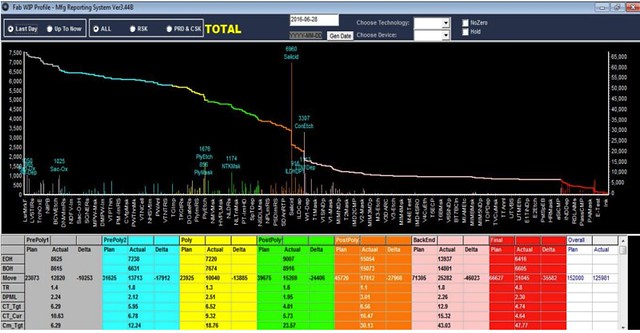

But I want to come back to the topic of cycle time. Look closely at that NXP chart, showing that time in the fab takes up the majority of the manufacturing cycle, 14 weeks out of 21 in this illustration.

14 weeks?!?!?!!!

Reality Check

Before reading this article, did you have any idea how long it takes to make a finished wafer?

Until a couple of years ago, I didn’t — and I’ve been working in the semiconductor industry for 11 years now! I’m not sure what kind of timescale I had in mind then, maybe a few days?

In February 2022, I put up a highly unscientific poll on Reddit’s /r/ECE (Electrical and Computer Engineering) with these responses:

| Time | Votes |

|---|---|

| 1 day | 18 |

| 3 days | 35 |

| 9 days | 56 |

| 1 month | 63 |

| 3 months | 61 |

| 9 months | 54 |

Quite the spread in answers. So apparently there’s a very unclear perception of how long fabs take to make chips.

The real answer is that it depends, but 1-5 months is probably a good wide-ranging guideline, with simpler processes (analog/power) taking less time, and advanced processes (sub-28nm leading edge) generally taking more time. Here’s what the Semiconductor Industry Association said in February 2021:[2]

Unfortunately, increasing semiconductor capacity utilization takes time, because semiconductors are incredibly complex to produce. Making a chip is one of the most, if not the most, capital- and R&D-intensive manufacturing process on earth. The fabrication is intricate and requires highly specialized inputs and equipment to achieve the needed precision at miniature scale. There can be up to 1,400 process steps (depending on the complexity of the process) in the overall manufacturing of just the semiconductor wafers alone. And each process step typically involves the use of a variety of highly sophisticated tools and machines. In short, making semiconductors is exceedingly hard and, therefore, takes time.

How much time? Manufacturing a finished chip for a customer can take up to 26 weeks. Here’s why: manufacturing a finished semiconductor wafer, known as the cycle time, takes about 12 weeks on average but can take up to 14-20 weeks for advanced processes. To perfect the fabrication process of a chip to ramp-up production yields and volumes takes even much more time — around 24 weeks.

Then, once the fabrication process is complete, the semiconductors on the silicon wafer need to go through yet another stage of production known as back-end assembly, test, and package (ATP), before the chips are final and ready for delivery to the end customer. ATP can take an additional 6 weeks to complete. Therefore, the lead time, which is from when a customer places an order to receiving the final product, can take up to a total of 26 weeks.

This probably skews the answer towards more advanced processes, though. (And it makes a very important error, which has been very apparent in the last two years: this article cites 26 weeks of manufacturing from a blank wafer to a finished chip, but that is the cycle time, not the lead time. Lead time is, as stated, the time from when a customer places an order to receiving a final product, but it can be very different from the cycle time. More on that in Part Six.)

A better answer states the cycle time as a function of the number of photomask layers, typically 1-2 days per mask layer (DPML). Semiconductor Engineering interviewed Robert Leachman of UC Berkeley in 2017:[3]

Generally, the most common metric for cycle time in the fab is “days per mask layer.” On average, a fab takes 1 to 1.5 days to process a layer. The best fabs are down to 0.8 days, Leachman said.

A 28nm device has 40 to 50 mask layers. In comparison, a 14nm/10nm device has 60 layers, with 7nm expected to jump to 80 to 85. 5nm could have 100 layers. So, using today’s lithographic techniques, the cycle times are increasing from roughly 40 days at 28nm, to 60 days at 14nm/10nm, to 80 to 85 days at 7nm. 5nm may extend to 100 days using today’s techniques, without extreme ultraviolet (EUV) lithography.

Estimates of DPML vary slightly. Leachman’s estimate of 1 to 1.5 days per layer seems slightly optimistic; UC Berkeley’s Competitive Semiconductor Manufacturing (CSM) Program reported a performance benchmark of 1.4 days cycle time per layer at full volume[4] in 2000. CSM’s 2002 report on benchmarking eight-inch sub-350nm fabs reported 1.4 to 2.8 days per layer for five industry fabs during 1999 and 2000, with a general downward trend over the 1995-2000 range.[5] Dr. Alvin Loke (now at NXP, then at Qualcomm) stated 1.5 - 2 days per layer in a 2019 presentation.[6] GlobalFoundries’ CTO mentioned 1.5 days of cycle time per mask layer in January 2017.[7] A paper by some engineers at SilTerra Malaysia in 2016 stated:[8]

In 2000, ITRS published the cycle time roadmap for semiconductor fabrication in Factory Integration section for 200mm wafers. The target given for normal production lot was 1.8 DPML for 180 nanometer (nm) technology node. Thereafter, ITRS guidelines no longer publish 200mm technology node roadmap. ITRS shifted focus to 300mm wafers instead and newer technology at 130nm and beyond for new cycle time target. 1.5 DPML was reported in the ITRS 2011 update. 1.3 DPML was reported in Integrated Circuit Economics 2010 Edition as the best cycle time for normal product for 300mm and 2.5 DPML as average.

In year 2010, actual survey claimed by IC Knowledge shows 300mm wafer fabrication performance is at 2.5 DPML.

This number varies by fab, and changes over time within each fab dependent on numerous criteria — but let’s stick with the 1-2 days per mask layer range, with the upper range being more likely.

The reason for using DPML as a metric is that IC manufacturing is very repetitive, going through very similar steps over and over again for each layer. I covered the basic fab process a bit in Part Two; each mask requires essentially the same steps, with minor variations, not necessarily in the order listed below:[9][10]

- cleaning and polishing the wafer

- deposition / ion implantation / diffusion — applying something onto the surface of the wafer to affect electrical properties: metals, silicon, oxides, or dopants. This may involve heating up the wafer in a furnace or may occur after photoresist is selectively removed — see later steps.

- applying photoresist

- exposure — shining ultraviolet light through the mask onto the wafer, to harden selective areas of the photoresist — often referred to as “lithography”, though strictly speaking, “lithography” or “photolithography” describes the whole process

- etch — removing parts of the top layer

- strip — removal of photoresist

- annealing — the wafer is heated in a furnace to mess around with the chemical structure, allowing crystals to “relax” and lower crystal stress or otherwise undergo some sort of chemical reaction. (One of these days I will be able to state what annealing accomplishes, without having to handwave my way through the conversation.)

- metrology and inspection — making sure that the wafer was modified as intended, within appropriate tolerance limits

The ordering is tricky. You’ll see abstract diagrams, like this one, in various journal articles on semiconductor manufacturing, that make me think of some kind of Kafkaesque game where you are stuck going around in circles trying to get free:

Abstract process flow of typical semiconductor manufacturing, redrawn from Hsieh & Hsieh[11]

It’s not a repeated linear progression through the same steps. This is easier to see if you were to look at a real process flow. The best I can do is to show the steps in one of the CMOS processes mentioned in the MIMAC project test data.[12] This was a SEMATECH study published in 1995, which included datasets taken from manufacturing data in production fabs:

In a separate effort, SEMATECH assembled several factory-level datasets. European data was added and validated under the MIMAC project. The purpose of collecting the datasets was to aid academics and suppliers in developing new models and tools for industry. The datasets contain actual manufacturing data from both ASIC and logic wafer fabrication facilities, organized into a standard format. They include no real product names, company names, or other nomenclature that could serve to identify the source of the data. Each dataset contains the minimum information necessary to model a factory, including product routings and processing times, rework routings, equipment availability, operator availability, and product starts.

Each MIMAC dataset includes a spreadsheet listing the process steps used for each of several sample products. This is not a complete recipe (“Add two cups of gallium phosphide. Heat to 925 °C and stir briskly....”) but it does give a short name for each step, along with a few timing details required to run a factory simulation. For the most part, the names and details are enough to figure out what category of tools are used in each step. In the figure below, I have drawn the process flow for Product 1 in Set 4, a microprocessor of some sort:

Process flow of Product 1 in MIMAC Set 4. Steps numbered in order in circles. White circles represent steps utilizing common tool groups. Green circles represent a cleaning step. Purple circles represent other steps.

Product 1 utilized a very minimal CMOS process with nine mask layers including one metal layer, probably dating back to the late 1980s.[13] Each mask layer goes through the same process of coating photoresist, running through an aligner for the exposure step, developing and baking the photoresist, doing something else, and then going through a resist “strip” process (with piranha solution!) to remove the photoresist. But the other steps of the process vary with each layer. For instance, many of the so-called front-end-of-line (FEOL) steps, which construct the transistors, resistors, and capacitors, require ion implantation. The back-end-of-line (BEOL) steps to interconnect the integrated circuit elements do not use ion implantation: the “APS” layer (aluminum to polysilicon & silicon contact), “Alum” layer (aluminum metal traces), and “Silox” layer (silicon dioxide for passivation) involve mostly thin-film deposition, furnace, and etching steps.

As far as number of layers in typical fab processes, here are some examples to give you a flavor of how this has changed over time:

-

The MOS 6502 required seven masks in 1975: a minimalist approach to NMOS fabrication, with one metal layer. Time through the MOS Technology fab took somewhere between 2 and 8 weeks, working 24 hours a day, 5 days a week.[14]

-

The CMOS process from the MIMAC dataset, as mentioned above, required nine masks, with one metal layer.

-

Today’s leading-edge ICs have multiple metal layers and all sorts of complexity, so that their mask layer count gets up into the dozens. Leachman’s estimate of “a 28nm device has 40 to 50 mask layers” is one data point; another is from a 2016 article quoting a director at Samsung at 40 mask layers for 45nm/40nm:[15]

“We started to see the mask count at about 40 mask layers at 45nm/40nm,” said Kelvin Low, senior director of foundry marketing at Samsung. “That grew into 60 mask layers for the 14nm and 10nm node. If you push that without EUV, and stretch immersion into triple or quadruple patterning, we expect the mask count to go to about 80 to 85 at 7nm. In some cases, you could see 90 mask layers, depending on the area scaling that you are trying to target. We think maybe one or two companies can afford this technology. The masses probably cannot afford this technology.”

-

Intel’s 90nm – 32 nm processes saw a gradual increase in metal layer count:[16]

- 90nm (2003) — 7 metal layers

- 65nm (2005) — 8 metal layers

- 45nm (2007) — 9 metal layers (metal 9 much coarser, for power distribution)

- 32nm (2009) — 9 metal layers (metal 9 much coarser, for power distribution)

Top row: Scanning electron microscope (SEM) cross-section of MOSFET junctions; Middle row: SEM cross-section of interconnect; Bottom row: Die photomicrograph. From Bohr & Mistry, Intel’s Revolutionary 22 nm Transistor Technology[17] (Credit: Intel Corporation)

-

Intel’s 14nm process, used in its Broadwell architecture, included 13 metal layers,[18] the lower 12 of which can be seen in this cross-section:

Scanning electron microscope (SEM) cross-section of Broadwell IC, from Bernasconi and Magagnin,[19] CC BY-4.0.

-

Freescale (now part of NXP) MPC561 microcontroller (2004): 250nm process, 3 aluminum metal layers.[20]

-

Freescale ATMC C90FG process used in the MPC5674F (early 2010s): 90nm, 6 copper metal layers, 55 mask layers, 350 process steps.[20]

-

Infineon C11N process, 130nm CMOS, 4-6 metal layers, circa 2007.[21]

-

SilTerra’s CL110AL process, 110nm, up to 6 metal layers, circa 2013.[22]

-

ST Microelectronics CMOS M10 process, 90nm, 4-6 metal layers.[23]

-

TSMC 45nm: 9 metal layers (7 small-geometry and two large power distribution metal layers)[24]

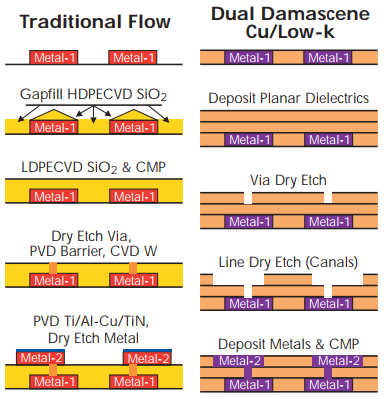

Metal layer count is important because each metal layer in modern fab processes requires at least two masks: one for vias to other layers and one for the metal itself. (You can see this in the Intel SEM images above, and in the “Metal layer manufacturing” diagram below.) It seems likely that most microcontrollers affected by the chip shortage (40nm – 250nm) would have between 3 and 9 metal layers, which therefore adds between 6 and 18 mask layers to whatever is required by the active and polysilicon layers (the FEOL steps) and a passivation layer at the end.

Metal layer manufacturing, from International SEMATECH 1999 Annual Report.

Smaller geometry processes (22nm FinFET and under, and maybe 28nm) start to require multiple patterning steps, which requires more masks and more process steps, and therefore increases cycle time — thankfully something that didn’t impact the “mature nodes”.

There are hundreds of steps (called “moves”) in a modern semiconductor fab. In addition to the sheer number of steps, there are some nuances that differentiate the kinds of processes that go on in a fab. Here’s an overview that I think helps give some flavor of those nuances:[25]

The semiconductor manufacturing process is performed in a clean environment known as a wafer fabrication facility or wafer fab. The steps required to manufacture a semiconductor product or device are described in a process flow or process routing; current generation semiconductor process flows can contain between 250 and 500 manufacturing or processing steps. Typically, the individual silicon wafers upon which semiconductors are manufactured are grouped into “lots” of 25 wafers. Each lot is uniquely identified in the wafer fab’s manufacturing execution system (MES) as a unit of production (job). Therefore, the individual wafers within a lot travel together throughout the manufacturing process.

The equipment set used to manufacture semiconductors is typically made up of 60 to 80 different equipment types. These equipment types contain a diverse array of wafer processing tools in terms of the quantity of wafers that can be processed concurrently. While single wafer tools (photolithography steppers) only process one wafer at a time, other tools (acid bath wet sinks) can process entire lots concurrently. Wafer fabs also contain batching tools (diffusion furnaces) that can process multiple lots of wafers simultaneously. Finally, some tools (ion implanters) are subject to sequence-dependent setup times, as the time required to setup the tool depends upon the previous lot that was processed on the tool.

Due to the production volumes required in today’s wafer fabs, these fabs contain multiple tools of each equipment type. This redundancy leads to the notion of a workstation or “tool group” made up of similar tools of a given equipment type that process wafers in parallel. Finally, semiconductor process flows contain a considerable amount of reentrant or recirculating flow, wherein a given tool may be visited a number of times during the manufacturing process by the same lot (job). This type of flow is necessitated by the capital cost of wafer processing equipment, which can cost up to \$7,000,000 per tool.

So: hundreds of steps, some are batch tools, some tools operate on single wafers, and they’re very expensive.

If you were paying close attention in Part Two, MOS Technology’s “019” process took 50 steps to complete the seven mask layers used in the 6502. Even that is a lot to take in, so to understand the general behavior of a fab, we’re going to take a detour from semiconductor manufacturing and look at a few simpler examples.

Freddy’s Forgery Factory

Freddy Flannery’s from Fresno.

Freddy found fame and fortune from art forgery. Freddy’s forté: forgotten works, famous Frenchmen. Cézanne, Degas, Gauguin, Renoir, Matisse, Monet… and finally—

Felony.

Five years, Folsom.

Freddy, freed but fatigued, found legitimacy in mass production. Freddy’s plan: a factory in Fresno, churning out first class facsimiles of famous works, framed.

The manufacturing process that Freddy had in mind — just imagine this running through Freddy’s head as he is falling asleep on his prison bunk — consisted of a few different machines connected by conveyor belts, roughly equivalent to the ones shown below:

Each machine loads canvases until it is full — one canvas for the single unit machines, several canvases for the batch machines — and then processes the canvases. When processing is done, it begins unloading; if there is no space on the unloading conveyor, the machine has to wait. (Continuous-process machines like the conveyor ovens don’t have a loading/unloading process, only a transport delay.)

For our purposes, the exact operations of these machines don’t matter; we just care about the timing of the products (canvases) as they travel along the manufacturing line. (Note: I am also not an art forger, so presumably machines like the ones illustrated here are unrealistic.) One major goal of manufacturing is to make sure there aren’t problems by keeping track of how the WIP is moving through the factory.

Here’s the whole factory shown in a more compact layout:

Freddy planned this very carefully, so he could manage both the throughput and latency of this process. The raw process time per painting is the sum of all these times, or 82 minutes. In Freddy’s factory, each machine (except for the conveyor oven) also takes a total of 30 seconds to load or unload each canvas, and the conveyor belts add a transport delay of 60 seconds for each step, when they are moving at full speed. By Freddy’s calculations, this adds another 12 minutes, for a total latency or cycle time through the factory of 94 minutes.

The throughput is dependent on the maximum rate of each step. In Freddy’s factory there are six equal bottlenecks of 5 minutes per painting:

- the two aging ovens take 18 minutes to process and 2 minutes to load/unload a batch of four paintings, for a total of 20 minutes = 5 minutes per painting

- the inkjet and brush machinery and the two inspection stations take 4.5 minutes to process + 30 seconds to load/unload = 5 minutes per painting

At 5 minutes per painting, that’s 12 paintings per hour. Freddy’s factory was designed to operate 24/7, so it should be capable of producing 288 paintings per day. Freddy fell asleep numerous times with visions of millions of dollars rolling in.

At least that’s what Freddy figured. The reality was a little different; Freddy made some serious mistakes in his calculations because he forgot to take into account some important limitations of manufacturing, namely yield loss, variability, queueing delays, and maintenance.

The on-line visualizations of Freddy’s factory don’t include yield loss — we won’t cover yield loss here; imagine that a defect is found during inspection, and the canvas can either be reworked or scrapped, with a decrease in production rate either way. But the other factors are there. If you wait a while, the storage pile at the end will show the cycle time of the most recently completed canvas:

123 minutes and 30 seconds?! That’s almost a half hour longer than Freddy’s 94 minute calculation. Where did the extra cycle time come from? (Hint: try reducing the “Canvas starts” slider to 8/hour, and look at cycle time as well as the flow of canvases through the factory. If you’re impatient, increase the simulation speed. I’ll reveal the answer later in the article.)

At any rate, let’s assume for the moment that 288 paintings per day is the factory capacity. The most serious error, perhaps, is that Freddy assumed demand was high enough to sell those 288 paintings per day. Over the long run, Freddy can’t produce more than he can sell, or he’ll end up with a growing number of unsold paintings in inventory, so he will likely be running at some lower rate, perhaps 144 paintings per day. The ratio of the actual production rate to factory capacity is the utilization of the factory: 144 paintings per day / 288 paintings per day = 50% utilization.

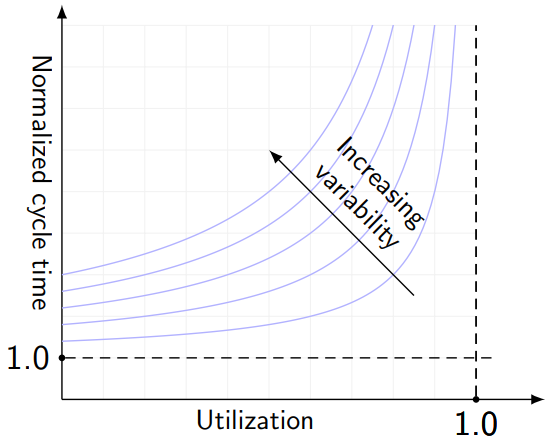

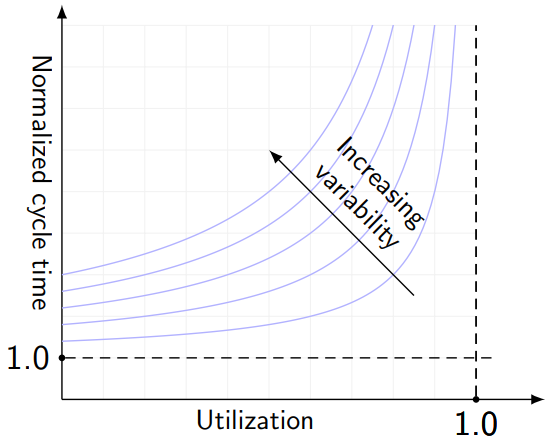

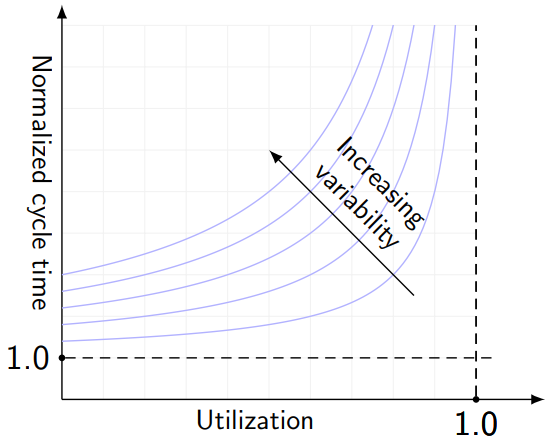

The cycle time of Freddy’s factory will change depend on the utilization; with everything else fixed, the cycle time is some function of utilization, called the operating curve. Operating curves look something like this:

This graph shows normalized cycle time, which is cycle time divided by its theoretical minimum, so that can never drop below 1. As the factory approaches 100% utilization, the cycle time zooms upwards, with a scaling factor that depends on variability in the factory.

Let’s look at another factory, and then I’ll talk about why these curves are the way they are.

Supply Chain Games 2022: Shapez

If you want a virtual factory experience that’s a little more engaging than Freddy’s Forgery Factory, I would suggest trying out Shapez, an in-browser game that has factory elements. (I’ve been told that I should try out Factorio, but from what I can tell it has an excess of evil addictive complexity, so I’ve avoided it so far.)

In Shapez, you are in a large grid with various deposits of shapes and colors. You start out next to a big squarish thing called The Hub, which demands you produce and deliver it various quantities of shapes.

To do this, you place Extractor machines onto one of the grid squares in a shape or color deposit, and it starts extracting the shape or color at a regular rate, initially 1 item every 2.5 seconds = 0.4 items/s. You can place conveyor belts to bring the extracted shapes to the Hub. There are other machines as well — cutting machines, rotators, balancers, stackers, painters, tunnels, etc. — which process the shapes as demanded by The Hub.

The game starts simple, but each time you produce enough of the target shape, it gets more complicated, and new machines are unlocked.

One of the challenges of the game is that not all the machines process at the same rate. If a machine doesn’t have one of the inputs it needs, the machine will wait. If an input arrives faster than it can process, the raw material will back up on the conveyor belts leading into the machine, and the belts leading out of the machine will have lots of empty space. These are signs of a local bottleneck: upstream inputs backing up, downstream output with empty space. In the image below, the cutting machines appear to be a bottleneck. (They are, for the most part. Taking a closer look, I see I haven’t divided up the semicircles equally among the painting machines. The lower conveyor belt runs through a balancer that splits up the content and diverts half of the semicircles onto a belt destined for the painting machine on the left, which gets the majority of of the semicircles, so the input belt that leads from the top cutter is backed up, waiting to sending semicircles through the tunnel and on into the painting machine. But the conveyor downstream from the painting machine is backed up also.)

One of the ways that you can compensate for unequal processing rates is to build repeated sections of machinery that each draw off part of the material from the conveyor belts, processing it in parallel with other sections. Here’s an example, showing three stackers that each grab a red circle from one conveyor belt and a green circle from another conveyor belt, stack them together, and put the resulting watermelon sections out through a tunnel onto a third belt.

At some point I realized there was extra space (note that only three out of every four grid rows are doing something), so I rebuilt it as part of a much larger ladder — with 12 stackers in parallel! — in a section of machinery constructed to deliver a 90-degree slice of watermelon to The Hub. In the big picture, the bottleneck is in a different area, containing cutting machines.

The bottleneck depends on the different processing rates — which, by the way, you can speed up by collecting enough shapes to upgrade your machinery.

Improving throughput in Shapez is all about finding bottlenecks, and adding more machinery to relieve those bottlenecks. Visual identification is usually pretty easy, but sometimes it helps to analyze numerically. Below is a snapshot of one section of machinery with three painter machines in parallel at 0.33/s, for an aggregate capacity of 0.99/s, and two cutter machines in parallel at 0.75/s each, for an aggregate capacity of 1.5/s. At the time I was playing, the conveyors and tunnels could handle 3 shapes per second.

So I could triple the number of painter machines and double the number of cutter machines to reach that 3 shapes per second throughput:

It’s easy to see when you get to this point: the conveyor belts are all full of work in process, with no space in between.

I had to fix a couple of things, though, that I had missed on first glance:

- the extractors are limited to 0.8/s, so I need four of them at the blue and square deposits to keep up with the demand of 3 shapes per second

- cutters output twice as many shapes as their input, so that produces 6 shapes per second, which I need two conveyor belts to carry.

But I did it! Now the bottlenecks are the conveyors and painters and cutters, all operating with a net throughput of 3 shapes per second in, 6 blue rectangles per second out. Like we saw in Supply Chain Idle in Part Three, the optimum factory design is when we balance the production capacity until we get 100% utilization on as many machines as possible.

Or is it?

The Missing Ingredient is Variability

That’s a trick question, because both Supply Chain Idle and Shapez are not like the real world. Both games — as well as Freddy’s ideal vision of a factory — are perfectly happy to run like clockwork. Chances are, if you’ve seen any video footage of a factory, it’s a smooth, hypnotic, fast-paced flow of stuff, like this beer bottling plant:

Look at that, zoom zoom zoom, everything is perfect, poetry in motion.

Some factories are like the beer bottling plant. But some are not, and the difference is all in how material flows through the factory. Smooth flows of material through the factory are known as synchronous. Imagine for a moment that there are 240,000 people drinking beer in some region of the world, and each of them drinks two bottles a day. The local beer bottling plant produces 480,000 bottles per day, packing them 24 to a case, 20,000 cases a day, sent to nearby stores and restaurants. Everything is balanced, with all the steps in the bottling plant running at the same rate, producing exactly enough to match the demand of one bottle every 0.18 seconds — this is known as the takt time — and everyone is happy.

James Ignizio puts it this way:[26]

The basis for the belief that a factory should employ a balanced line running at takt speed is a consequence of a narrow focus on synchronous factories. An ultimate example of a synchronous factory might be that of a soft-drink bottling plant. In such a plant, the flow of each job (i.e., each bottle) is synchronized with every other job. There is no extra room on the conveyor belt connecting one workstation to another, so balance and synchronization are essential.

Automobile assembly lines, while not necessarily strictly synchronous, are very close to being synchronized. In a moving automobile assembly line or a perfectly synchronized line, a balanced line makes sense. But this does not hold for asynchronous factories such as semiconductor wafer fabrication facilities.

What may not be obvious is how difficult it is to achieve synchronous flows in a manufacturing plant, or the disadvantages of trying to run synchronous flows, or what happens when flows are not synchronous, or why some manufacturing processes are naturally synchronous and some are not.

(There’s some really deep thoughts lurking here, by the way. I think I understand the situation enough to provide an explanation for some of those topics, but realize that there have been major differences of opinion even among manufacturing professionals about the optimal way to run different types of factories, so don’t treat what I have to say as the gospel truth.)

The key feature of an ideal synchronous manufacturing plant is that the cycle time is purely dependent on processing steps and the transport time to get through the plant. There is never any wasted time: the beer bottles in the plant are not waiting for machinery, and the machinery is never waiting for beer bottles. Either of those cases represents lost opportunity. If the machines wait for more products to arrive, then they aren’t fully utilized. If the products are waiting for machines, then cycle time is longer than necessary, and the factory needs space for those products to wait.

In a sense, the synchronous manufacturing plant represents perfection, and any deviation from that synchronous flow reduces the plant’s ability to keep cycle time to its theoretical minimum.

There are common real-world situations that have some similarity to the challenges of the synchronous flow in a factory; one of them is highway traffic. In heavy traffic, smooth vehicle flow is impaired, and both of the following tend to happen:

- Traffic density is high enough that vehicles are forced to slow down and wait to proceed

- Traffic density is lower in other places, and represents a lost opportunity; if only the flow of traffic were more uniform, then there would be fewer slowdowns.

The first of these is familiar to us, as a traffic jam; the second may not be. Both are visible in this picture:

One alternative to irregular vehicle flow in highway traffic should also be familiar to us: consider a freight train, with each car connected to the next, all traveling synchronously together. The freedom of each car on a highway to travel at its own speed — as long as it doesn’t run into the vehicle in front — allows variability, and we get traffic jams when there are enough cars on the road.

Keep that thought in the back of your mind. We’re going to look at another example to understand the effect of variability in a factory a little more clearly. Variability can put us in dire straits. Which reminds me, we need some music.

Notes

[1] Seeking Alpha’s transcript of TI’s earnings call for Q4 2022 misattributes this response to Dave Pahl. [2] Semiconductor Industry Association, Chipmakers Are Ramping Up Production to Address Semiconductor Shortage. Here’s Why that Takes Time, Feb 26 2021. [3] Mark LaPedus, Battling Fab Cycle Times, Semiconductor Engineering, Feb 16 2017. [4] Robert C. Leachman and David A. Hodges, Competitive Semiconductor Manufacturing: Program Update, University of California at Berkeley, Jul 8 2000. [5] Robert C. Leachman, Competitive Semiconductor Manufacturing: Final Report on Findings from Benchmarking Eight-inch, sub-350nm Wafer Fabrication Lines (CSM-52), University of California at Berkeley, Mar 31 2002. The cycle time per layer measurements are graphed on page 49. This report covers ten industry fabs, anonymized as “M1” through “M10”, but included reported data in the 1999 and 2000 range from only five of them. An earlier report, CSM-31, published in 1996, covered a wider range of fabs (including AMD, Cypress, Intel, IBM, Lucent, Motorola, National Semiconductor, Samsung, TSMC, Texas Instruments, Toshiba, and UMC) measured over the 1992–1995 timeframe. This report included cycle time data from CMOS logic fabs (1.8 – 3.3 days per layer) and Medium Scale Integration fabs (analog circuits and power devices; 1.2 – 3.7 days per layer). [6] Alvin Loke, IEEE CICC2019 ES2-2: Nanoscale CMOS Implications on Analog/Mixed-Signal Design, IEEE Custom Integrated Circuits Conference, Apr 2019, posted on YouTube Dec 18 2019. Unfortunately the video was removed, and I can’t find any written records with the 1.5 - 2 DPML mentioned. Slides are still available; Dr. Loke mentioned cycle times on slide 40. [7] David Lammers, Innovations at 7nm to Keep Moore’s Law alive, Solid State Technology, Jan/Feb 2017. The article cites a statement by GlobalFoundries’ CTO Gary Patton, speaking at the SEMI Industry Strategy Symposium in January 2017. [8] Kader Ibrahim, Mohd Azizi, and Uda Hashim, Semiconductor Fabrication Strategy for Cycle Time and Capacity Optimization: Past and Present, Proceedings — International Conference on Industrial Engineering and Operations Management, Mar 8-10, 2016. [9] Jessica Timings, Six Crucial Steps in Semiconductor Manufacturing, ASML, Oct 6 2021. [10] Microcontroller Division Applications, AN900: Introduction to Semiconductor Technology, STMicroelectronics, 2000. [11] Liam Y. Hsieh and Tsung-Ju Hsieh, A Throughput Management System for Semiconductor Wafer Fabrication Facilities: Design, Systems and Implementation, Processes, Feb 11 2018. Article published under a Creative Commons Attribution (CC BY) license. [12] John Fowler and Jennifer Robinson, Measurement and Improvement of Manufacturing Capacity (MIMAC)

Designed Experiment Report, Jul 20 1995. Datasets available online at FernUniversität in Hagen website. [13] NMOS microprocessors dominated until the mid-1980s; CMOS microprocessors of this era were the Western Design Center 65C02 (1983), Intel 80C51 (1983), Motorola 68HC11 (1984), Motorola 68000 (1985), and Intel 80386 (1985). The single metal layer of MIMAC Microprocessor Product 1, and the fact that it was still in production in the early 1990s may imply some kind of low-cost long-lifetime microcontrolller. (Intel’s CHMOS-III process used in the 80386 was a 1.5-micron process with two metal layers, according to the company’s “Introduction to the 80386” databook.) [14] I got a couple of different answers from various staff members at the MOS Technology plant in Pennsylvania during the NMOS days (1970s - early 1980s). Bil Herd, designer of the Commodore 128, who worked at MOS from 1982 – 1986 remembered “about a month for a full run”. (pers. comm. Mar 16 2022) Herd spoke at Vintage Computer Festival Midwest 11 in September 2016, recounting that “it’d take another quarter million dollars and three or four weeks to spin the chip to do another rev of it.” Bill Barnhill, who worked in the fab on equipment maintenance and process development from 1973 – 1986, remembered “a standard batch of wafers took between two to three weeks to go through the fabrication area. Special runs could be made in 10-14 days but that was mainly for new improvements or changes to the process.” (pers. comm. Jul 15 2023) MOS/Commodore ran the fab 24 hours a day, 5 days a week, with maintenance “performed on the weekend to eliminate downtime”. (pers comm. May 18 2022) Albert Charpentier, lead designer of the Commodore 64 chipset, who worked at MOS from 1975 – 1982, remembered 6-8 weeks as the standard fab manufacturing turn time, with 2-3 weeks under expedited circumstances. According to Charpentier, the Commodore/MOS fab “turned the VIC-II R1 in about a little more than a week to make January 1982 CES.” (pers. comm. Jul 16 2023) A one-week turnaround through wafer fab is unheard of these days, due to process complexity; even then, with a 7-layer NMOS process, and downtime on weekends, this would have been less than a day per mask layer. A 1985 IEEE Spectrum article (Tekla S. Perry and Paul Wallich, Creating the Commodore 64: The Engineers’ Story, Mar 1 1985) states: David A. Ziembicki, then a production engineer at Commodore, recalls that typical fabrication times were a few weeks and that in an emergency the captive fabrication facility could turn designs around in as little as four days. There would likely have been some variation in cycle time as the business cycle progressed and led to fluctuations in MOS/Commodore’s fab utilization. [15] Mark LaPedus, Mask Maker Worries Grow, Semiconductor Engineering, Aug 18 2016. [16] Intel IEDM papers for the 90 – 32 nanometer range: [17] Mark Bohr and Kaizad Mistry, Intel’s Revolutionary 22 nm Transistor Technology, Intel Newsroom slide presentation, May 2011. [18] Sanjay Natarajan et al., A 14nm Logic Technology Featuring 2nd-Generation FinFET , Air-GappedInterconnects, Self-Aligned Double Patterning and a 0.0588 m2 SRAM cell size, 2014. Intel publishes details on its processes in papers submitted to the IEDM, and usually includes dimensions of the metal layers, but didn’t put much detail for the 14nm process. [19] Roberto Bernasconi and Luca Magagnin, Review—Ruthenium as Diffusion Barrier Layer in Electronic Interconnects: Current Literature with a Focus on Electrochemical Deposition Methods, Journal of The Electrochemical Society, Dec 10 2018. [20] John Cotner, Semiconductor 101: Functionality and Manufacturing of Integrated Circuits, Freescale Semiconductor, Sep 2013. What a great set of slides! Includes several dozen just on wafer fab processes. [21] Infineon, C11N 130nm CMOS Platform Technology, publication date Sep 2007. [22] SilTerra website, https://www.silterra.com/technology/. See also SilTerra slide deck, Ibero-America IC Design Contest, Apr 25 2013, slide 22: CL110AL listed as available Q1/Q2 of 2013. [23] STMicroelectronics, Product Change Notification PCN APG-MID/13/8204, Nov 5 2013. [24] Kuan-Lun Cheng et al., A highly scaled, high performance 45 nm bulk logic CMOS technology with 0.242 μm2 SRAM cell, 2007 IEEE International Electron Devices Meeting, Dec 2007. (“7+2M BEOL process” shown in Fig 12.) [25] Scott J. Mason and John W. Fowler, Maximizing Delivery Performance in Semiconductor Wafer Fabrication Facilities, Proceedings of the 2000 Winter Simulation Conference. [26] James P. Ignizio, Optimizing Factory Performance, 2009.

Sad Fish Bank

We’re going to take a look at a very simple queuing example: La Banque du Poisson Maussade, a hypothetical bank located somewhere in Europe, quite possibly in Belgium. This bank is very efficient. It is open 24 hours a day, 7 days a week, with no holidays. It has one entrance door, one exit door, and one teller, who can process requests instantly. Oh, and there is space inside the bank for about 50 people to wait in line, with a much larger secondary waiting area, which may make you suspicious that there is a catch.

There are three catches, actually:

- The bank only handles deposits and withdrawals.

- Yes, the teller processes requests instantly, but at random.

- As long as there is room, the entrance door lets people in at random.

At random? What does that mean, exactly? And why does it matter?

Random Processes: Radioactive Decay and Telephone Calls

Any one who considers arithmetical methods of producing random digits is, of course, in a state of sin.

— John von Neumann, Symposium on the Monte Carlo Method, 1949

The entrance doors and teller are examples of random processes, meaning something that happens unpredictably. But many random processes can be characterized statistically. In this bank, the entrance and teller are each modeled by an average rate of events.

(Warning! This section contains mathematics! I’ll try to keep it brief and simple. If you get overloaded by the math, either take a deep breath and ignore it while you keep reading, or just skip ahead until you see the section Math takeaways.)

For example, the arrival rate \( \lambda \) (lambda) characterizes entrance events: in any given small interval of time \( \Delta t \), the probability that someone walks in the entrance is \( \lambda \Delta t \). If \( \lambda = 0.01/s \), then during each second, there is a one-in-a-hundred chance that someone will walk through the door; during each millisecond, there is a one-in-a-hundred-thousand chance that someone will walk through the door. There is always the possibility that as you turn and look at the door, one person walks through the door during the first millisecond and another person walks through the door less than a millisecond after the first. This would happen infrequently (about one in ten billion each millisecond, according to this model, which would happen on average about once every four months) and is not very realistic — in real life, it would take a couple of seconds for one person to pass through a doorway and allow the next person to enter. But in our model, that doesn’t occur: each entry event is instantaneous and independent. The time between entry events, called the interarrival time, has a mean value of \( 1/\lambda \), so with \( \lambda = 0.01/s \), the average time between people entering the bank is 100 seconds. The interarrival times are random, and happen to follow an exponential distribution.

The teller also processes customers at a certain rate \( \mu \), with the same characteristics: within any given small time interval, if there is a customer waiting for the teller, the probability that the teller will finish processing the customer’s request is \( \mu \Delta t \).

This kind of system is called an M/M/1 queue; the M stands for Markovian — all events in both arrival and servicing are independent of past history, also called a Poisson process — and the 1 means there is a single server, sometimes indicated as shown in the diagram below:

The behavior of a queue is the subject of the field of queueing theory, which was first formalized by A. K. Erlang in 1909. Erlang published a paper while working at the Copenhagen Telephone Company to analyze the mathematical properties of telephone traffic. But man-made inventions are not the only things that can be characterized using queueing theory. Events characterized as a Poisson process with some rate \( \lambda \) are fairly common in the real world, in any situation where these events are part of a recurring process and there are no reasons for them to occur at any particular time relative to other events: radioactive decay, rainfall, shot noise in electric currents — in addition to telephone network traffic. These are all good examples of arrival events.

The modeling of a bank teller’s service times by a Poisson process, on the other hand, is kind of odd — but let’s stick with it for now.

The math behind an M/M/1 queue is fairly simple, and we can draw it as a Markov chain with each state denoting the number of customers inside the bank:

The arrows between states show the transition rates: customers arrive at rate \( \lambda \), increasing the state by one each time a customer arrives, and customers depart at rate \( \mu \), decreasing the state by one each time a customer is serviced at the teller.

The interesting part of queue analysis starts to happen when you answer questions about the behavior of the queue. For example, if I walk into the bank as a customer, how long is my expected wait and how many customers are likely to be ahead of me?

The M/M/1 queue has a steady-state probability distribution in each state \( k \) of \( P[k] = (1-\rho)\rho^k \) where \( \rho = \lambda/\mu \) is the utilization of the queue. For example, if \( \lambda = \) 2 customers per minute and \( \mu = \) 3 customers per minute, then \( \rho = \) 2/3, and there’s a 1/3 chance of zero customers in the bank, 2/9 chance of one customer in the bank, 4/27 chance of two customers in the bank, 8/81 chance of three customers in the bank, and so on. The probability that there are at least \( k_0 \) customers in the bank (in other words, \( k \ge k_0 \)) is \( \rho^{k_0} \). This means there might be at least 50 customers waiting in line! The probability of this happening at any given instant is really small; for \( \lambda = 2, \mu = 3 \), this probability would be \( (2/3)^{50} \approx 1.568 \times 10^{-9} \), or a little more than one in a billion. But you can’t rule it out!

So how large should the bank’s waiting area be? That’s much harder to answer. In this example, at first glance, if we want the probability for the queue to overflow to be less than one in a billion, then it seems like room for 50 customers should be adequate: 50 customers fit, 51 do not, and the probability of at least 51 customers is \( (2/3)^{51} \approx 1.046 \times 10^{-9} \). But that’s the probability of this occurring at any given instant that we choose to look at the bank. If we restated that we wanted the probability of the queue overflowing at least once during an entire year of operation to be approximately 1%, it would require a queue capacity of 40. (The probability of overflow in this case is approximately \( 1-e^{-Lt} \) where \( t= \)1 year ≈ 526000 minutes and \( L = \lambda(1-\rho)^2\rho^{40} \approx 2.0097\times 10^{-8} \)/minute, leading to an overflow probability of about 0.0105. Real-world situations would probably just handle the situation by the natural distaste of customers deciding not to enter the bank if the line is too long.)

As for my original questions, with \( \lambda = \) 2 customers per minute and \( \mu = \) 3 customers per minute:

- How long is my expected wait? The expected wait time (time in the bank before I get to the teller) is \( 1/(\mu - \lambda) - 1/\mu = \) 40 seconds, and the expected service time after getting to the teller is \( 1/\mu \) = 20 seconds, for an expected total time of \( 1/(\mu-\lambda) = \) 60 seconds.

- How many customers are likely to be ahead of me? The expected number of customers in line when I arrive is \( \sum\limits_{k=0}^{\infty}kP[k] = \frac{\rho}{1-\rho} \) which is (2/3)/(1/3) = 2.

The derivation of these is probably a good exercise for math students, but too much of a distraction here, so I’ll just refer to Wikipedia.

Why do we care about the random aspect of arrival and service times in queues? The main reason is that it causes temporary mismatches between the entrance and exit rates of the queue. During some periods of time, we will have more items entering than exiting the queue, and the queue length will increase. In other periods of time, the server will process queue items faster, and the queue length will decrease. And when the queue becomes empty, it represents lost opportunity: the server must sit idle, waiting for something to do.

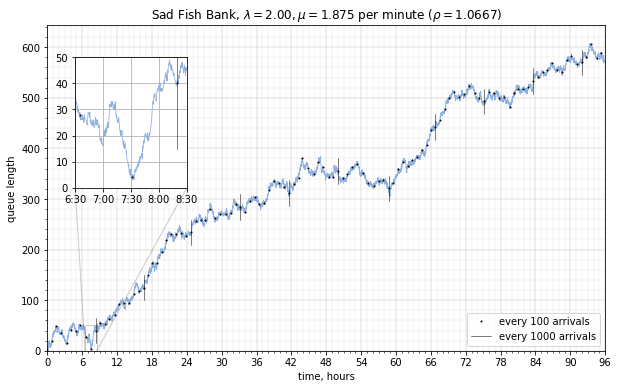

Weird things happen when the utilization \( \rho=\lambda/\mu \) approaches 1 or even exceeds it… but rather than trot out some equations, let’s see our bank in action!

At La Banque du Poisson Maussade, the teller’s service rate is \( \mu = \) 2/minute — an average service time of 30 seconds — and there’s a 2-second “walk-up time” for the customers to step up to the teller, for an effective net service rate of \( \mu’ = 1/(32 \mathrm{s}) = \) 1.875/minute. This gives a utilization of \( \rho = 1.0667 \)… greater than one… yikes! If you crank up the simulation speed, you’ll see the customers pile up, with a brief reprieve at around 7 hours where the customers are lucky enough that the teller catches up — but then after that the pile-up happens again in earnest.

If you want to see the case of \( \rho = 2/3 \), where the expected number of customers in line is 2, you’ll need to change the arrival rate \( \lambda \) down to 1.25/minute.

Over a long time, if \( \rho > 1 \) the pile-up or overflow rate is somewhat consistent; here’s a sample run of the first 96 hours of operation at \( \lambda = 2.0 \):

The overflow rate \( \lambda - \mu = \) 0.125/minute = 7.5 customers per hour, so after 96 hours we should expect around 720 customers, with some variability — in this sample run there is just under 600. This variability can be quantized: the standard deviation of queue length \( N(t) \) for \( \rho > 1 \) increases with time, approximately \( \sigma_N = \sqrt{(\lambda+\mu)t}, \) and at very large time scales, the effect of randomness becomes less significant compared to the mean. Over the course of 500 hours the queue length meanders around, staying mostly within one standard deviation of the mean.

If we’re planning a factory or a bank running for a long time, we need to take into account both effects: the mean values will dominate over the long term, but we can’t ignore the short-term random fluctuations or the worst-case behavior will overload our system.

Of course, the \( \rho > 1 \) case is not sustainable, so let’s look at some smaller values of \( \lambda \).

Below but near \( \rho = 1 \) is the case of heavy congestion; queue lengths can become long, and graphed over time look “turbulent”:

This turbulence comes from the fact that once the queue length does build up, it takes a long time to drain back down. For example, with \( \lambda=1.8 \) and \( \mu=1.875 \) per minute, long queues drain at a rate of \( \mu-\lambda=0.075 \) per minute, an average of 13.3 minutes per person. Most of the teller’s work goes towards keeping up with the arrival of new customers.

At lower utilization, the queue length waveforms become more steady-looking. There are still occasional bursts of arrivals that increase queue length, but they drain more quickly.

Expected Queue Length and Little’s Law

For an ideal M/M/1 queue, the expected queue length \( \bar{N} = \frac{\rho}{1-\rho} \); this yields \( \bar{N} = 2 \) for \( \rho = 2/3 \) and \( \bar{N} = 4 \) for \( \rho = 4/5 \). The graphs above for these utilization levels show a larger mean queue length from measured data — but that’s because in our bank, there is transport time: the customers take approximately \( w_T = \)121.5 seconds = 2.025 minutes to traverse the waiting area. (In Sad Fish Bank, customers walk slowly and dejectedly.) This adds an extra amount \( \lambda w_T \) to the expected queue length, an example of Little’s Law: expected number of customers in the queue is directly proportional to the expected time in the queue, by a factor of the arrival rate \( \lambda \).

So the expected number of customers inside Sad Fish Bank, theoretically speaking, is shown here in Equation 1:

$$ \bar{N} = \underbrace{\frac{\rho}{1-\rho}} _ {\text{waiting in line}} + \underbrace{\vphantom{\frac{\rho}{1-\rho}}\lambda w_T} _ {\text{transport time}} \tag{1} $$

One way of thinking about Little’s Law is that it expresses “conservation of stuff”.

If that is a bit too abstract, here is another example. One day I went to a revolving sushi restaurant and decided to see if I could figure out how many plates could fit on the conveyor belt.

I picked a recognizable spot on the belt, a small sign stating “Seweed Salad”, and measured how long the sign took to go around once. It took 9 minutes and 6 seconds, so the waiting time \( W = \) 546 seconds.

Then I waited for a bunch of plates to come along with no space between them, and timed how long it took 20 plates to pass by: 44.26 seconds, so the arrival rate \( \lambda = \) 20 / 44.26 s = 0.452 plates per second.

The number of plates on a full belt should therefore be \( \lambda W \) = 246.8, so my best estimate is 247 plates.

Little’s Law just applies the same idea to the case of a queue with probabilistic variation, where the expected count = expected rate × expected time.

Waiting Time Distribution, Dependency on Utilization, and X-Factor

The simulation of Sad Fish Bank lets us see how close theory comes to experiment. Theory (Equation 1) says the expected mean queue length is \( \bar{N} = \frac{\rho}{1-\rho}+\lambda w_T \).

If we measure the number of customers in the bank \( N(t) \) over a long period of time, we can calculate the average queue length. I did this for two different arrival rates:

| \(\lambda\) | \(\rho\) | \(\bar{N} = \frac{\rho}{1-\rho}+\lambda w_T\) | \(\text{mean}(N(t))\) |

|---|---|---|---|

| 1.50 | 4/5 | 7.038 | 6.715 |

| 1.25 | 2/3 | 4.531 | 4.409 |

The measured results from the simulation come fairly close to the expected mean queue length \( \bar{N} \).

We can also look at the queueing time \( w \) for various arrival rates. This is just the time interval from when each customer enters the bank until the teller is ready to serve them. It does not include the time spent waiting at the teller, which is 2 seconds walk-up time plus a mean value of 30 seconds for the teller to process the requests. We can measure \( w \) for a large number of customers and then sort the measurements, which approximates the distribution of \( w \):

Some customers get served very quickly, and some have to wait a long time. The minimum queueing time \( w \) is the transport time \( w_T= \) 121.5 seconds, but for high utilization, the waiting time is much longer: nearly an hour in some cases for \( \lambda = 1.80 \) / minute \( (\rho = 0.96) \).

For \( \lambda = 1.25 \) / minute \( (\rho = 2/3) \), it’s not quite as bad, with about 90% of customers waiting less than 5 minutes, and a worst-case of 10 minutes. But that’s still a lot of time compared to the average of 32 seconds spent at the teller.

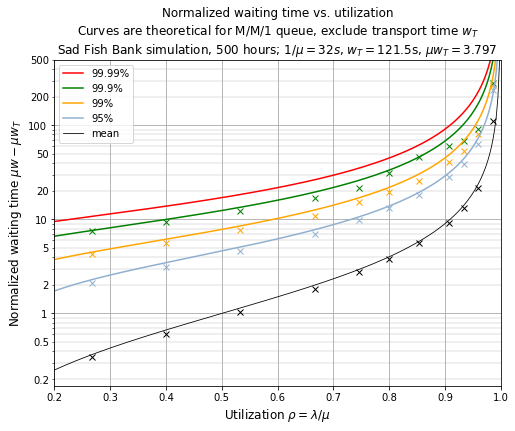

Another way of looking at waiting times is to show the mean waiting time \( \bar{w} \) and high-end quantiles: what is the waiting time \( w_q \) not exceeded by 95% \( (q=0.95) \) or 99% \( (q=0.99) \) or 99.9% \( (q=0.999) \) of the customers? (In other words, if \( q=0.99 \), \( w_q \) is the 99th percentile of waiting times, so that 99% of customers have a waiting time \( w \le w_q \) and the remaining 1% have a waiting time \( w > w_q. \))

This graph plots normalized waiting times \( \mu w \): a value of \( \mu w \) = 1 is the same as the average time spent at the teller \( 1/\mu \) (32 seconds in this case). I have subtracted out the transport time \( w_T \).

The theoretical values of these times (mean waiting time, and waiting time for a given quantile \( q \))[27] are

$$\begin{aligned} \mu\bar{w} &= \frac{\rho}{1-\rho} + \mu w_T \\ \mu w_q &= \frac{1}{(1-\rho)}\ln\frac{\rho}{1-q} + \mu w_T \end{aligned}$$

which I have plotted in the graph above as solid curves, with measured values from 500-hour simulations of Sad Fish Bank shown as x’s. Again: the simulation measurements line up fairly closely with theory, until you get to very high utilizations.

This graph shows us the cost of trying to increase throughput. Theoretically we can run a bank or factory right near 100% utilization \( (\lambda=\mu) \), but the waiting time skyrockets. Increasing throughput increases latency, with very large sensitivity as throughput nears its maximum. If we want to keep our utilization at 80% (\( \lambda = \) 1.50 customers/minute) then we’re making customers spend an average of 4 times as long waiting for the teller as it takes the teller to process their requests — and in Sad Fish Bank, there’s another 3.8 times as long just making them traverse the large waiting space. Don’t you hate when there’s a big line in the airport that winds back and forth?

It would be much better if Sad Fish Bank could limit the customer arrival rate \( \lambda \), and limit utilization \( \rho \) to a lower value. This would reduce the waiting times — as well as cut the maximum queue length, which would allow Sad Fish Bank to use a shorter queueing area, reducing transport delays. At this bank, there are only a few ways to reduce utilization:

- turn away customers when they arrive too frequently (keep \( \lambda \) below a maximum)

- limit the number of customers allowed in the bank, turning away more customers once this limit is reached

- speed up the teller (probably not practical)

- hire more tellers (increase the service rate \( \mu \) — though technically when there are \( m \) tellers in parallel, the behavior is slightly different, forming an M/M/m queue instead of an M/M/1 queue.)

The down side of decreasing utilization (by hiring tellers) is that the bank is paying for resources to process customers. A utilization of less than 40% keeps the waiting time low, but try justifying to the bank’s Board of Directors that their tellers are spending most of their time waiting for customers.

The sum of the waiting time \( w \) and the service time \( x \) is the total system time \( s \), and the average normalized system time \( \mu \bar{s} \) is known as the X-factor in manufacturing, which is the normalized cycle time I showed earlier in the theoretical operating curve graph.

The X-factor is the ratio of total time needed to complete a manufacturing step to the raw processing time.* For example, if a widget waited 16 minutes for a machine that took 8 minutes to paint the widget, that would be an X-factor of \( (16 + 8)/8 = 3. \) An X-factor of 1 means that there’s no wait; cycle time is just the raw processing time needed to complete an operation. It’s possible to determine an X-factor for each machine as well as for the manufacturing process as a whole. X-factors in the 3 – 5 range are typical in semiconductor manufacturing.[28] This tradeoff of latency versus throughput is a tough one to optimize.

(*Fine print: raw processing time should really be considered as the theoretical minimum cycle time, and includes unavoidable delays such as transporting from one machine to another.)

Exponential Service Times, Revisited

I said earlier that the modeling of a bank teller’s service times by a Poisson process (exponentially-distributed) is kind of odd. Imagine a teller who is playing a dice game, throwing five dice every 1.16 seconds, and every time the dice shows a “full house” (three of one number, two of a different number), the teller processes the customer’s request instantly. Odds of a full house are 25/648, so this occurs on average every 1.16 × 648/25 = 30.07 seconds. Or another teller throwing five dice every 23 milliseconds, and whenever the dice are five-of-a-kind (odds \( =(\frac{1}{6})^4 = 1/1296 \)), the customer’s request gets processed, on average once every 0.023 × 1296 = 29.808 seconds. These dice game examples are essentially exponentially-distributed processes — although they are at discrete intervals, whereas a true Poisson process can have any interval of time between events, including 1 nanosecond.

Exponential distributions are very heavily front-loaded. Imagine ordering a grilled cheese sandwich from a diner with exponentially-distributed service times with a mean of 30 seconds. Your grilled cheese sandwich would arrive:

- 15.35% of the time, in less than 5 seconds

- 13.00% of the time, between 5 and 10 seconds

- 11.00% of the time, between 10 and 15 seconds

- 9.31% of the time, between 15 and 20 seconds

- 7.88% of the time, between 20 and 25 seconds

- 6.67% of the time, between 25 and 30 seconds

- 23.25% of the time, between 30 and 60 seconds

- 8.55% of the time, between 60 and 90 seconds

- 3.15% of the time, between 90 seconds and 2 minutes

- 1.58% of the time, between 2 and 3 minutes

- 0.21% of the time, between 3 and 4 minutes

- 0.03% of the time, between 4 and 5 minutes

- 0.0045% of the time, in more than 5 minutes

Very suspicious, indeed — and not very likely for processes that are consistent in nature. Some processes may have completion times that are exponentially-distributed in nature, like telephone calls, or time-to-failure for objects with constant failure rates. But constructing something?

Part of the reason M/M/1 queues, with their exponentially-distributed service times, are studied, may be like that old joke about the man looking for something under a street light:[29]

A few night ago a drunken man—there are lots of them everywhere nowadays—was crawling on his hands and knees under the bright light at Broadway and Thirty-fifth street. He told an inquiring policeman he had lost his watch at Twenty third street and was looking for it. The policeman asked why he didn’t go to Twenty-third street to look. The man replied, ‘The light is better here.’

The Poisson process is the easy case, and you can learn a lot just by studying it even if it’s not exactly what you need. (There are ways to analyze M/G/1 queues with more general service time behavior. Don’t ask me, though, unless it’s just citing basic formulas.)

The other reason to use simplified models based on exponentially-distributed service times is that they might not be that bad an approximation after all. Here’s why:

The Optimist’s Folly

Let’s look at a different random process that I call the Optimist’s Folly.

Suppose you have some kind of machine that processes its input in some time \( t \) consisting of a series of \( N=100 \) steps, each of which is some amount of time \( t_x \) which is either:

- \( t_x = T_0 = \) 0.2178 seconds with probability \( 1-p=0.999 \)

- \( t_x = T_0 + t_V \) with probability \( p=0.001 \), where \( t_V \) is random but uniformly distributed between 0 and \( T_1 = 164.38 \) seconds.

In other words, most of the time, the step takes exactly 0.2178 seconds; but about one out of a thousand times the machine has an intermittent failure which can take almost 3 minutes time to fix.

The mean time and standard deviation of the total time \( t \) are both 30 seconds, matching the mean and standard deviation of the exponential distribution for \( \lambda = 1/T \) where \( T= \)30 seconds. About 90% of the time, the machine doesn’t fail at all, and takes exactly 21.78 seconds to process its input.

The reason I call it the Optimist’s Folly is that most of the time this machine is faster than one which takes 30 seconds to complete its work. But every so often — about 10% of the time — it fails at least once, and unless you were very objective about determining its behavior, you would probably underestimate how quickly it finishes.

For those interested in the math: